
Multi-Region Backend

This document describes how to run the server backend on multiple geographical regions to minimize network latency.


Understanding how Entities are placed on server instances - To run the backend in multiple regions, some server nodes must be configured to run in specific regions. Configuring Cluster Topology describes how to configure certain entities to run on certain nodes.

Multiplayer Entities - Multi-region support is only available for ephemeral multiplayer entities. See Introduction To Multiplayer Entities for how to implement a custom multiplayer entity.

IP Geolocation - For the server to be able to choose the region closest to the player, IP geolocation must be enabled. This process is described in Implementing Player IP Geolocation.

Load Balancing - After choosing the region where an entity should be placed to be closest to the relevant players, the server must choose a suitable node in that region. Balancing Load Between Nodes describes how to choose the most suitable node based on load information.

Self-hosted Infra Stack - The Metaplay Platform currently only offers hosting in the eu-west-1 region. To deploy game servers in other regions, you need to provision Kubernetes clusters in the desired edge regions. The recommended way to provision an edge cluster is to use Metaplay's Terraform modules.

Accessing the Repository

Metaplay's Terraform modules are available in the private metaplay-shared GitHub organization. Please reach out to your account representative for access to it.

When using multiplayer entities, the latency between one player performing an action and another player observing it is mostly determined by the players' network latency to the game server. To minimize latency, the game server should be as geographically close to the players as possible. As the Main Cluster can only reside in one region, no single region can provide low latency to all players around the globe.

To mitigate this, the game backend can be deployed in multi-region mode, where multiplayer entities can run either on the Main Cluster or on one of the Edge Clusters, whichever is closest to the players' physical location.

In this example, we continue from Balancing Load Between Nodes and deploy the hypothetical Battle entity on both a Main Cluster in the eu-west-1 region and an Edge Cluster in the us-west-1 region, and then steer players' battles to run in the region closest to them.

We'll start by creating a Kubernetes cluster in the us-west-1 edge region. Follow the instructions in examples/multi-region-game-servers, substituting the regions and stack_configs as necessary. After this step, you should have the Edge Cluster created and connected to the Main Cluster.


In the previous step, we created the Edge Cluster and connected it to the Main Cluster. Kubernetes is now running in the edge region, but by default no game server nodes (Kubernetes pods) are placed on the Edge Cluster.

As described in Configuring Cluster Topology, the unit of placement is called a node set. We'll first declare a node set on the Edge Cluster to host the Battle entities, in the deployment's Helm values:

```yaml
# Environment name and family
environment: my-environment-name
environmentFamily: Development
# ...

# Enable the new Metaplay Kubernetes operator.
experimental:
  gameserversV0Api:
    enabled: true

shards:
  # ...
  - name: battle-us
    nodeCount: 1 # one node
    clusterLabelSelector: # place on US cluster
      region: us # the name we gave to the edge cluster
```

With the node set declared on the US region, we'll then allow placement of the Battle entities on it. For this, we'll edit the cloud Server Options file for the environment and add the node set name as an override.

```yaml
Clustering:
  EntityPlacementOverrides:
    Battle: ["logic", "battle-us"]
```

Entity Config

This same EntityPlacement can also be declared in the Entity's EntityConfig declaration by setting NodeSetPlacement => new NodeSetPlacement("logic", "battle-us").

We have now set up Battle entities to run on the "logic" and "battle-us" node sets, corresponding to the Main Cluster in the EU region and the Edge Cluster in the US region. Next, we'll update the Battle creation logic to steer US players to the US region and EU players to the EU region.

We'll start by Implementing Player IP Geolocation to map players' IP addresses to geolocations.

With geolocation in place, we'll augment the node selection logic from Balancing Load Between Nodes with a simple location scoring system: each player in a region gives points to the nodes in their region, and WorkloadSchedulingUtility chooses the best node within these bounds.
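As a rough illustration of the scoring idea, the sketch below counts, for each node set, how many battle members are located on a continent that the node set serves. It is a standalone model, not Metaplay SDK code: `LocationScoring` and `ScoreNodeSets` are hypothetical names, and the continent codes are assumed to come from the IP geolocation step.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, simplified model of the location scoring: every battle member
// gives one point to each node set that serves the member's continent.
public static class LocationScoring
{
    // nodeSetContinents: node set name -> continent codes it serves
    //   (mirrors the shape of the Loadbalancing:NodeSets mapping).
    // memberContinents: one continent code per battle member; null means the
    //   member's location is unknown, and such members give no points.
    public static Dictionary<string, int> ScoreNodeSets(
        IReadOnlyDictionary<string, string[]> nodeSetContinents,
        IEnumerable<string> memberContinents)
    {
        // Start every configured node set at zero so that it stays a candidate.
        Dictionary<string, int> scores = nodeSetContinents.Keys.ToDictionary(name => name, _ => 0);

        foreach (string continent in memberContinents)
        {
            if (continent == null)
                continue;

            foreach (KeyValuePair<string, string[]> entry in nodeSetContinents)
            {
                // Continent codes are compared case-insensitively ("us" matches "US").
                if (entry.Value.Contains(continent, StringComparer.OrdinalIgnoreCase))
                    scores[entry.Key]++;
            }
        }

        return scores;
    }
}
```

With a mapping of logic to eu and battle-us to us, a battle with two US players and one EU player scores battle-us 2 and logic 1, so the US node set is preferred.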

We start by converting the player locations into regions. For this, we'll use a continent-level mapping, declared in a new LoadbalancingOptions class:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

[RuntimeOptions("Loadbalancing", isStatic: true)]
public class LoadbalancingOptions : RuntimeOptionsBase
{
    public record struct BattleNodeSet(string[] Continents);

    public Dictionary<string, BattleNodeSet> NodeSets { get; private set; }

    public override async Task OnLoadedAsync()
    {
        // Validate that the NodeSets match the node sets given for Clustering
        ClusteringOptions clusteringOptions = await GetDependencyAsync<ClusteringOptions>(RuntimeOptionsRegistry.Instance);
        ShardingTopologySpec shardingSpec = clusteringOptions.ResolvedShardingTopology;

        // Filter out unknown node sets. This allows defining all possible node sets in the base config.
        // A missing NodeSets section is treated as empty so that the check below reports it.
        NodeSets = (NodeSets ?? new Dictionary<string, BattleNodeSet>())
            .Where(nodeset => shardingSpec.NodeSetNameToEntityKinds.ContainsKey(nodeset.Key))
            .ToDictionary(nodeset => nodeset.Key, nodeset => nodeset.Value);

        // Fail to start if no node sets are configured for battles
        if (NodeSets.Count == 0)
            throw new InvalidOperationException("No battle NodeSets defined for the current cluster config. Check the Loadbalancing:NodeSets option.");
    }
}
```

Then, define the region mapping in the server config:

```yaml
Loadbalancing:
  # Mapping from node set names to their corresponding continent codes.
  # These are not the names of the clusters.
  NodeSets:
    # In singleton mode (local server), use any region. It doesn't matter which.
    singleton:
      Continents: [eu]
    # In Main + Edge mode, mark the Main region's logic node set as EU
    # and the US node set as US
    logic:
      Continents: [eu]
    battle-us:
      Continents: [us]
```

Next, we'll use this mapping to convert the Battle members' locations to continents and then to regions. Note that this approach works regardless of whether a Battle has one or multiple members.

```csharp
async Task<(EntityId, BattleSetupParams)> StartBattleAsync(BattleEntry battleEntry)
{
    ClusteringOptions clusterOpts = RuntimeOptionsRegistry.Instance.GetCurrent<ClusteringOptions>();
    LoadbalancingOptions loadbalancingOptions = RuntimeOptionsRegistry.Instance.GetCurrent<LoadbalancingOptions>();
    ClusterConfig cc = clusterOpts.ClusterConfig;

    // Create a new battle entity
    EntityId newBattleId = await WorkloadSchedulingUtility.CreateEntityIdOnBestNode(EntityKindGame.Battle,
        (nodeInfo, workloads) =>
        {
            // Find the configuration of this node set
            NodeSetConfig config = cc.GetNodeSetConfigForShardId(nodeInfo.shardId);

            // Node sets without a Loadbalancing mapping get a zero score
            if (!loadbalancingOptions.NodeSets.TryGetValue(config.ShardName, out LoadbalancingOptions.BattleNodeSet battleNodeSet))
                return 0;

            // ...
```
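The snippet above stops partway through the scoring callback. As a hedged sketch of one way the remaining decision could be made, the standalone code below picks the node whose node set has the highest location score and breaks ties by load. The `NodeCandidate` record, the `ActiveBattles` load metric, and `BattleNodeChooser.ChooseNode` are hypothetical and are not part of the `WorkloadSchedulingUtility` API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical description of a node that could host a new Battle.
// ActiveBattles stands in for whatever load metric the load balancer uses.
public record NodeCandidate(string NodeId, string NodeSet, int ActiveBattles);

public static class BattleNodeChooser
{
    // Chooses the node whose node set has the highest location score and breaks
    // ties by picking the node with the fewest active battles. Nodes whose node set
    // has no entry in nodeSetScores (for example, node sets missing from the
    // Loadbalancing mapping) are skipped. Returns null if no node is eligible.
    public static NodeCandidate ChooseNode(
        IEnumerable<NodeCandidate> candidates,
        IReadOnlyDictionary<string, int> nodeSetScores)
    {
        return candidates
            .Where(node => nodeSetScores.ContainsKey(node.NodeSet))
            .OrderByDescending(node => nodeSetScores[node.NodeSet])
            .ThenBy(node => node.ActiveBattles)
            .FirstOrDefault();
    }
}
```

Because the ordering falls back to load when scores are equal, a battle in which every node set scores zero (for example, when no member location is known) lands on the least-loaded eligible node in this sketch.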

With the setup above, Battle entities run in the region nearest to the players. However, if we measure the network latency by enabling MultiplayerEntityClientContext.EnableLatencyMeasurement and observing the results in MultiplayerEntityClientContext.OnLatencySample(), we notice that the latency for players in Edge regions has not improved. This is expected, because by default all client traffic is routed via the Main region.
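One way to make the before/after comparison concrete is to collect the latency samples and compare percentiles rather than individual values. The helper below is a hypothetical sketch, not part of the Metaplay client SDK; it assumes you forward each sample, in milliseconds, from your own OnLatencySample() handler, and it uses the nearest-rank percentile definition.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper that accumulates round-trip latency samples (in milliseconds)
// and summarizes them with nearest-rank percentiles.
public sealed class LatencySummary
{
    private readonly List<double> _samplesMs = new List<double>();

    public void Add(double latencyMs) => _samplesMs.Add(latencyMs);

    public int Count => _samplesMs.Count;

    // Nearest-rank percentile: the smallest sample such that at least p percent
    // of all samples are less than or equal to it. p must be in (0, 100].
    public double Percentile(double p)
    {
        if (_samplesMs.Count == 0)
            throw new InvalidOperationException("No samples recorded.");
        if (p <= 0 || p > 100)
            throw new ArgumentOutOfRangeException(nameof(p));

        List<double> sorted = _samplesMs.OrderBy(x => x).ToList();
        int rank = (int)Math.Ceiling(p * sorted.Count / 100.0); // 1-based rank
        return sorted[rank - 1];
    }
}
```

Recording a session before and after enabling direct connections, and comparing for example `Percentile(50)` and `Percentile(95)`, shows whether edge players actually benefit.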

No WebGL support

Direct connections require raw UDP sockets. WebGL does not support raw sockets and therefore cannot use direct connections.

To improve the latency, we can enable direct connections for the multiplayer entity. This allows clients to communicate with the entity directly, without the round trip via the Main region. We enable this by exposing the necessary ports in the deployment's Helm values for all Battle-hosting nodes:

```yaml
shards:
  # ...
  - name: logic
    nodeCount: 1 # one node
    public: true # allocate a public IP
    podPorts: # allow packets on UDP 5455
      - containerPort: 5455
        hostPort: 5455
        protocol: UDP
  - name: battle-us
    nodeCount: 1 # one node
    clusterLabelSelector: # place on US cluster
      region: us # the name we gave to the edge cluster
    public: true # allocate a public IP
    podPorts: # allow packets on UDP 5455
      - containerPort: 5455
        hostPort: 5455
        protocol: UDP
```

And we're done! The client will automatically use the direct connection to this entity when available, avoiding the cross-region network latency.

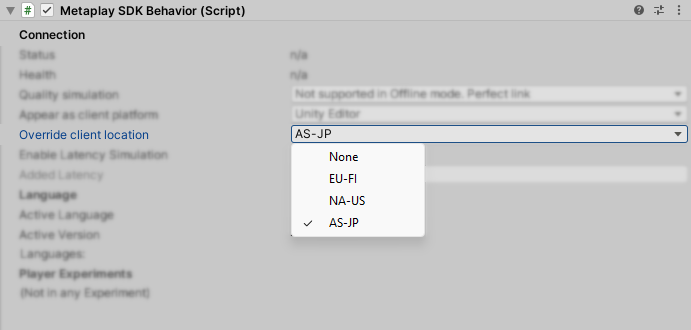

You can use the location spoofing feature in the Unity Editor to test the logic that assigns the player's multiplayer entity to the nearest region.

To inspect UDP traffic in packet captures, you can use the following Wireshark Lua plugin. To use it, paste the snippet into the Wireshark Lua console and evaluate it; the captured traffic can then be filtered with the metaplay.dt display filter. Note that the payload is encrypted and cannot be inspected.

Default Port

The plugin assumes the default port 5455 is used.

-- This snippet is released under GPLv2 License, as

-- required by the Wireshark project for Lua plugins

metaplay_dt_proto = Proto("metaplay.dt","Metaplay Direct Transport")

function metaplay_dt_proto.dissector(buffer,pinfo,tree)

pinfo.cols.protocol = "MDT"

local subtree = tree:add(metaplay_dt_proto,buffer(),"Metaplay Direct Transport")

if buffer:len() <= 28 then

subtree:add(buffer,"Invalid Packet")

else

subtree:add(buffer(0,4),"connection_id: " .. buffer(0,4):le_uint())

subtree:add(buffer(4,8),"counter: " .. buffer(4,8):le_uint64())

subtree:add(buffer(12,16),"auth_tag: " .. buffer(12,16))

subtree:add(buffer(28),"Encrypted Payload (" .. buffer(28):len() .. " bytes)")

end

end

udp_table = DissectorTable.get("udp.port")

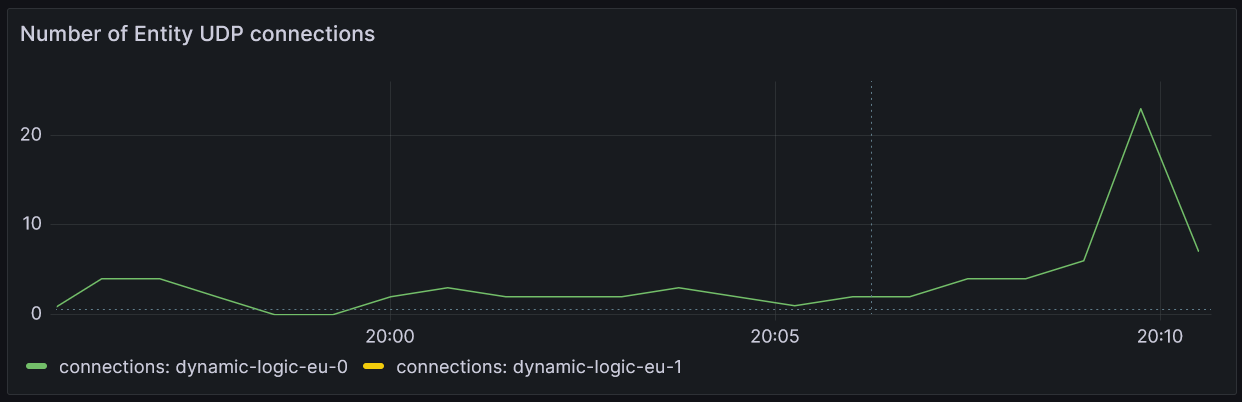

udp_table:add(5455,metaplay_dt_proto)A direct connection is an optional UDP-based communication channel the client may open in parallel to the normal connection. As such, the normal connection metrics do not include these channels. The current connection count can be inspected with the following query:

```promql
sum(game_directconnection_connections_total{namespace=~"$namespace"} - game_directconnection_connections_ended_total{namespace=~"$namespace"}) by (pod)
```
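For tooling outside Wireshark, the packet header that the dissector above decodes can also be read in C#. This is a sketch that assumes the layout is exactly what the dissector shows (a 28-byte header with little-endian integer fields); `DirectTransportHeader` is a hypothetical type, not a Metaplay SDK API, and the payload stays encrypted.

```csharp
using System;
using System.Buffers.Binary;

// Header layout as assumed by the Wireshark dissector:
//   bytes 0..3   connection_id (uint32, little-endian)
//   bytes 4..11  counter       (uint64, little-endian)
//   bytes 12..27 auth_tag      (16 bytes)
//   bytes 28..   encrypted payload
public readonly record struct DirectTransportHeader(uint ConnectionId, ulong Counter, byte[] AuthTag, int PayloadLength)
{
    public const int HeaderSize = 28;

    // Returns false for packets the dissector would label "Invalid Packet"
    // (28 bytes or fewer, i.e. no payload after the header).
    public static bool TryParse(ReadOnlySpan<byte> packet, out DirectTransportHeader header)
    {
        header = default;
        if (packet.Length <= HeaderSize)
            return false;

        uint connectionId = BinaryPrimitives.ReadUInt32LittleEndian(packet.Slice(0, 4));
        ulong counter = BinaryPrimitives.ReadUInt64LittleEndian(packet.Slice(4, 8));
        byte[] authTag = packet.Slice(12, 16).ToArray();

        header = new DirectTransportHeader(connectionId, counter, authTag, packet.Length - HeaderSize);
        return true;
    }
}
```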